In the beginning, there was only the Monolith. The monolith is one of the oldest software architecture styles and has been in use for decades. After all, we had one file per application, and until the 1990s, we didn’t have applications composed of other applications, so there wasn’t a need for any other type of architecture.

What is the Monolithic Architecture?

A monolithic architecture is the traditional unified model for designing an application. Monolithic software is intended to be a single, self-contained unit. The application’s components are tightly coupled, and each part can communicate with any other part directly. Usually, a monolithic application will exist as a single codebase being modified by multiple teams within an organization and deployed as a single unit containing all the functionality that those teams maintain.
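As a rough illustration of the definition above, here is a minimal Python sketch of a monolith: three components living in one codebase and one process, calling each other directly. The component names (`Inventory`, `Billing`, `OrderService`) and the `build_app` helper are invented for this example and are not taken from any particular system.

```python
class Inventory:
    """Stock-keeping component (hypothetical)."""

    def __init__(self, stock):
        self._stock = dict(stock)

    def reserve(self, item, quantity):
        if self._stock.get(item, 0) < quantity:
            raise ValueError(f"not enough stock for {item}")
        self._stock[item] -= quantity

    def available(self, item):
        return self._stock.get(item, 0)


class Billing:
    """Pricing component (hypothetical)."""

    def __init__(self, prices):
        self._prices = dict(prices)

    def total(self, item, quantity):
        return self._prices[item] * quantity


class OrderService:
    """Order component: talks to the other components with plain method calls."""

    def __init__(self, inventory, billing):
        self._inventory = inventory
        self._billing = billing

    def place_order(self, item, quantity):
        self._inventory.reserve(item, quantity)      # in-process call, no network hop
        return self._billing.total(item, quantity)   # same process, same memory


def build_app():
    """Wire every component together into the single deployable unit."""
    inventory = Inventory({"widget": 10})
    billing = Billing({"widget": 5})
    return OrderService(inventory, billing), inventory
```

Everything above ships together: changing `Billing` means rebuilding and redeploying the same unit that contains `Inventory` and `OrderService`.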

Monoliths Through the Ages

The monolithic architecture appeared in the early days of computing. The applications built in the Early Computing Era were often monolithic, due to the limited resources and technology available at the time. While most of the languages of that era supported multi-file applications, the resulting modules were a logical grouping of routines for readability rather than a way of splitting an application into multiple components.

The early 1960s bring us to the Mainframe Era. During this time, the monolithic architecture still prevailed. Despite the increased computational resources provided by mainframes, even the most powerful systems such as the IBM System/360, a beast during its prime, still had extremely limited resources compared to modern systems. Monolithic architectures were more efficient to run since they made good use of the resources available. Also, in the 1960s, threads as we know them today were not yet in common use. The term “thread”, as we use it today, refers to a lightweight unit of execution within a process. Systems of the time relied more on batch processing, meaning that a sequence of programs would be executed one after another. The monoliths of the time were typically single-threaded applications, and this architecture fit the systems of the era perfectly.

The 1980s bring us to the Client-Server Era. Despite the rise of client-server computing and the appearance of the first scalability issues, monolithic architecture still dominated the architectural scene. Many organizations had already invested in monolithic applications, so it was easier to create a client application than to rewrite the whole application with a newer and shinier distributed architecture. Switching to distributed architectures required significant effort, and remember that the Internet as we know it today wasn’t around, so the benefits weren’t apparent. Moreover, the technology that makes modern distributed architectures so prevalent had not yet been developed.

The 1990s and the 2000s bring us to the Web Application Era. During this time the Internet as we know it today started to take form. And despite all odds, our old friend the monolith continued to hold strong. The single-codebase approach made it easier to develop and deploy applications, especially for smaller projects. Also, there were a lot of brownfield projects around. Many organizations were very hesitant to change their legacy systems. Even today, most legacy systems are just giant balls of mud and spaghetti code. These systems are complex, tightly integrated, and difficult to migrate. Since the scaling requirements were not that great, it was considered more cost-efficient to maintain and enhance the existing applications than to attempt a re-architecture. Moreover, a monolith could be developed and deployed much faster than a distributed application. Remember that this period began with the boom of dot-com companies, before the dot-com bubble burst.

As we entered the Microservice Era, the monolith started to become more and more cumbersome. As applications grew in complexity and request volumes increased, monolithic architectures started to fall behind. Scaling the entire application horizontally isn’t the best solution for every scalability issue, and so the transition to fully distributed applications began.

Are monoliths still relevant?

Understanding how a monolithic architecture is designed and implemented is still important in the age of microservices. Firstly, many organizations still rely on monoliths as part of their application stack. I have worked at two quite large companies in the last 4 years that still use monolithic architectures for their core application. You might be surprised at how reluctant some organizations are to alter their old reliable monolithic workhorses. Understanding the monolithic architecture will allow you to work effectively with old, fragile legacy systems.

Also, keep in mind that not all applications require the same level of scalability as Netflix, and a microservice design is not for everyone. Furthermore, if you look around the internet these days, you will find a growing tendency to ditch microservices in favour of monolithic structures. There is no such thing as a universal solution; each product has its unique set of requirements and challenges, and forcing it into the most popular design is a recipe for disaster.

Advantages of the Monolithic Architecture

Simplicity

A monolithic application is inherently simple. The entire application is developed, deployed and managed as a single unit.

First of all, the monolithic architecture fosters developer collaboration. With all components integrated under a shared codebase, developers can more easily understand the system and its interdependencies. This single codebase helps team members collaborate and navigate the code. There are no inter-service interactions to manage, which simplifies development. Because all components run in the same process, they can communicate directly: developers can use the functions and data they need without network communication or inter-service protocols. This streamlined approach speeds up development and avoids the mistakes that come with inter-service communication.

Moreover, by tightly linking components, monolithic architecture simplifies testing. It’s easier to develop whole application test suites. This unified testing strategy lets us quickly find and fix data flow, functionality, and integration issues. Developers can track application execution within a single codebase, making debugging easier.

Finally, deploying a monolithic application is simple. Since all components are packaged together, there is no need to coordinate the deployment of multiple services or containers, and the overhead of managing a distributed system is avoided. In case of errors, the entire program can be rolled back at once, which makes rollback and version management easier.

Cost Effectiveness

For small applications or organizations with limited resources, a monolithic design can save money. Infrastructure savings are a major benefit. In contrast to distributed systems, the entire application is deployed as a single unit, so service discovery, load balancers, and API gateways are often unnecessary. Thanks to this reduced infrastructure complexity, monolithic architecture is cost-effective, especially for applications with minimal traffic or resource needs.

It also simplifies operations and reduces infrastructure expenses. Deploying and maintaining a single codebase cuts operational overhead and cost. There is no need to independently deploy, manage, and extend many services or containers, which streamlines operations and reduces the operational knowledge required. Because the application’s components are tightly integrated, monitoring and logging are simpler too, with centralized monitoring and unified logs. For small organizations or development teams, these operating cost savings can be significant.

Consistency

Monolithic architecture encourages consistency throughout the application, which can improve the user experience and development process overall.

All application components are written and tested together in a single codebase in a monolithic design. This unified development approach guarantees coding standards, architectural patterns, and development techniques are consistent. Developers working on various portions of the program adhere to the same set of principles and conventions, resulting in a unified codebase. Consistency in development techniques facilitates collaboration, minimizes the likelihood of inconsistencies or conflicts, and improves code maintainability.

The seamless integration of components is another feature of consistency in monolithic design. Because all of the components are part of the same application, they may communicate with one another without the use of sophisticated communication techniques. This close integration enables efficient data sharing and communication between components, lowering the overhead of data transformation and API interactions in distributed systems. As a result, the user experience is improved because the application has a single interface and consistent behaviour across different functionalities.

Finally, due to the consistency produced by tightly connected components, the maintenance of a monolithic application becomes more efficient. Making modifications or introducing new features with a single codebase may be easier than managing several dispersed services. Developers can gain a comprehensive perspective of the entire system, allowing them to more effectively identify and address possible faults. This consistency in maintenance techniques decreases the time and effort necessary for maintenance operations while also improving the application’s general stability and reliability.

Robustness

One of the benefits of monolithic architecture is the robustness that comes from tightly coupled components. With all of the components integrated into a single codebase, they can communicate and operate efficiently. This close integration allows for more effective communication and data exchange while reducing the performance bottlenecks and potential concerns connected with inter-service communication in distributed systems. Direct method calls and a shared memory space within a monolithic design result in faster and more efficient data transfers, lowering latency and improving overall program performance.

Furthermore, monolithic architecture’s strong coupling simplifies error handling and improves fault tolerance. Failures or mistakes that arise within the system can be more easily managed within a single codebase. When an error occurs in one component, it can be treated in the same context, allowing for quicker error detection, recovery, and resilience. Robust error-handling mechanisms can be deployed systematically throughout the program, ensuring uniform error management procedures and lowering the likelihood of error propagation across different services or components.
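To illustrate what handling an error "in the same context" can look like, here is a small Python sketch. The `Inventory` class, `charge` function, and `PaymentError` exception are hypothetical names made up for the example. The point is only that a failure in one component can be caught and compensated for in the same call stack, with no remote coordination.

```python
class PaymentError(Exception):
    """Raised by the (hypothetical) payment component."""


class Inventory:
    def __init__(self, stock):
        self.stock = dict(stock)

    def reserve(self, item, quantity):
        if self.stock.get(item, 0) < quantity:
            raise ValueError(f"not enough stock for {item}")
        self.stock[item] -= quantity

    def release(self, item, quantity):
        self.stock[item] = self.stock.get(item, 0) + quantity


def charge(amount, card_ok):
    """Stand-in for a payment component; fails when card_ok is False."""
    if not card_ok:
        raise PaymentError("card declined")
    return amount


def place_order(inventory, item, quantity, unit_price, card_ok):
    inventory.reserve(item, quantity)
    try:
        return charge(unit_price * quantity, card_ok)
    except PaymentError:
        # Same process, same call stack: undo the reservation right here.
        inventory.release(item, quantity)
        raise
```

In a distributed design the same compensation step would typically involve a second network call that can itself fail, which is part of what the paragraph above is getting at.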

The simplicity of deployment and scaling provided by monolithic architecture also contributes to robustness. Because the entire application is deployed as a cohesive entity, controlling its deployment and scaling is easier than managing dispersed systems with numerous services. Scaling a monolithic application usually entails either giving more resources to the existing infrastructure or replicating the entire application. Either way, this basic scaling technique simplifies resource management and avoids the difficulties associated with scaling individual components independently.

Finally, the simplicity of monolithic architecture contributes to the system’s overall robustness. Developers get a full grasp of the entire application with a single codebase, making it easier to identify and address possible issues. This holistic perspective allows for more effective monitoring, debugging, and troubleshooting, lowering the time and effort required to fix problems. The simplicity of the architecture also simplifies testing because all components can be tested concurrently, ensuring extensive test coverage and minimizing integration-related concerns.

Disadvantages of the Monolithic Architecture

Scalability

The limited granularity of scalability is one of the key issues in scaling monolithic structures. All components in such architectures are tightly integrated and deployed as a single unit. As a result, growing the program entails replicating the complete application rather than scaling certain components independently. Because of the lack of granularity, it is impossible to tailor resource allocation to the exact needs of individual components or services. As a result, inefficient resource consumption may occur, limiting the system’s ability to handle variable levels of load across different areas of the application.
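A back-of-the-envelope sketch in Python can make the granularity problem concrete. The component names and memory figures below are invented for illustration, and the model assumes memory use simply adds up across components, which is a simplification.

```python
def memory_for_whole_app_replicas(component_memory_mb, replicas):
    """Total memory when every replica carries every component (monolith)."""
    return sum(component_memory_mb.values()) * replicas


def memory_for_independent_scaling(component_memory_mb, replicas_per_component):
    """Total memory when each component gets its own replica count (default 1)."""
    return sum(
        memory_mb * replicas_per_component.get(name, 1)
        for name, memory_mb in component_memory_mb.items()
    )


# Hypothetical footprint of three components, in megabytes.
components = {"catalog": 512, "checkout": 256, "reporting": 1024}

# Only "checkout" is under load and needs four copies.
monolith_total = memory_for_whole_app_replicas(components, replicas=4)
independent_total = memory_for_independent_scaling(components, {"checkout": 4})
```

With these made-up numbers the monolith needs 7168 MB to get four copies of `checkout`, while scaling that one component on its own would need 2560 MB. The gap is the "inefficient resource consumption" described above.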

Another barrier is the monolithic architecture’s scaling limits. Because all components are part of the same program, scaling requires scaling the entire application. This can be time-consuming and impair the system’s ability to handle high traffic or higher workloads. Scaling a monolithic program frequently necessitates extensive hardware resources, resulting in greater infrastructure expenses. Furthermore, there may be a point at which scaling the entire program becomes difficult or financially unfeasible. This scalability limitation can make it difficult to fulfil rising demand or accommodate unexpected traffic spikes.

Finally, in monolithic designs, scalability difficulties extend to the development and deployment processes. Introducing updates or developing new features in a closely connected architecture might be difficult. Managing and coordinating development activities across various teams or developers gets increasingly difficult as the program grows larger and more complex. The interdependence of several components may necessitate substantial coordination and testing, thereby slowing down the development process. Similarly, releasing updates or new versions of a monolithic program frequently necessitates deploying the entire application, increasing the risk of failures or disruptions during the deployment process.

Maintainability

The complexity of the code is one of the key maintainability issues with monolithic designs. The codebase may swell and become complex as the program develops and additional features are added. Understanding the relationships and interactions between various sections of the program can be challenging because all components are intricately entwined within a single codebase. Because of this complexity, the codebase is more difficult to maintain and modify, and changes or new features are more likely to introduce errors. It can also be difficult to maintain a modular and reusable codebase when components are not clearly separated from one another.

Monolithic architectures may also experience longer release cycles and slower development cycles. Since all components are created and delivered simultaneously, any modifications or updates to the application necessitate thorough system testing and validation. It can take a while to thoroughly test a monolithic application, especially when modifications to one component may have unforeseen effects on other system components. It may take longer to provide new features or bug patches as a result of the prolonged testing process, which makes it more challenging to react quickly to user needs or market demands.

The absence of isolation between components in monolithic architectures is another barrier to maintainability. A flaw or defect in one component of a monolithic application could possibly affect the whole thing. It is more difficult to identify and contain problems due to the strong coupling between components because a problem in one element of the program may spread to other sections. This lack of separation might make it more difficult to identify the source of the issue and implement specific changes, which can make debugging and troubleshooting less successful. Additionally, it could be more challenging to undo changes or go back to a previous stable version in case of problems due to the common codebase and interdependencies between components.

Monolithic architectures may make initial development and deployment simpler, but as the program grows and scales, the maintainability issues can become more serious. Microservices and other distributed architectures offer better alternatives for maintainability because they allow for the independent creation and upkeep of individual services. Clear divisions between components are made possible by microservices, which enhances the modularity, scalability, and maintainability of the code. However, because it involves architectural changes and adds more complexity to managing distributed systems, moving from a monolithic architecture to a microservices architecture calls for careful planning and study.

Deployability

Monolithic designs display several deployability issues, one of which is the complexity and size of the deployment unit. A monolithic application requires the deployment of the complete codebase because all components are tightly connected and distributed as a single entity. Larger deployment packages may emerge from this, which would take more time and resources to deploy. Larger applications take longer to deploy, which might make it more difficult to release updates or new features on schedule. Furthermore, because a single problem might have an effect on the entire system, delivering the complete monolithic program raises the chance of mistakes or disruptions during the deployment process.

Furthermore, because of the interdependencies between many components, distributing updates or new versions of a monolithic application can be difficult. To make sure that a change doesn’t have unintended effects elsewhere, it is frequently necessary to thoroughly test and validate the entire application after making a change to one component. It may take a while to thoroughly test the full monolithic application, which could cause a delay in the deployment process. It can be challenging to coordinate and synchronize the deployment of numerous components inside a monolithic design, especially when there are dependencies and linkages between various system components.

Lack of flexibility in deployment options is another element of deployability in monolithic architectures. A homogenous infrastructure where all components can run together is often needed when deploying a monolithic application because all components are strongly coupled. The application’s scalability and deployment options are constrained by this constraint. For instance, it might not be possible to deploy a particular application component independently without also deploying the entire monolith if that component needs greater computational power or specialized infrastructure. The adoption of diverse deployment tactics like containerization or serverless computing may be hindered by this lack of flexibility in deployment options, which can also hinder resource optimization.

While monolithic architectures make development and initial deployment simple, the difficulties with deployability increase as an application gets bigger and more sophisticated. Microservices and other distributed architectures offer better alternatives for deployability since they allow for the independent development and deployment of individual services. Microservices allow for autonomous service scalability, deployment, and rollback, giving the deployment process more fine-grained control and flexibility. However, because it entails architectural modifications and more difficult distributed system management, switching from a monolithic architecture to a microservices architecture calls for careful planning and study.

Scaling Monolithic Architectures

Contrary to popular belief, monolithic applications can be scaled in more than one dimension. Most microservice aficionados will claim that “Scaling the application can be difficult – a monolithic architecture is that it can only scale in one dimension.” This statement is a gross misunderstanding of monolithic architecture. Nothing is ever this cut and dried. There are several ways to approach horizontal scaling, and microservices do not own the patent on this process.

Also, this is exactly the way of thinking that leads us to reach for the most popular technique without knowing what it is actually for. Misconceptions like that often lead to people mindlessly following the latest trend, and to self-proclaimed tech gurus encouraging people to shame engineers for their decisions. After all, if your only tool is a hammer, all problems start to look like nails.

Vertical Scaling

Vertical scaling is the most obvious solution for scaling an application. Vertical scaling involves upgrading the CPUs, adding more memory, expanding storage capacity, or improving network bandwidth. Instead of increasing the number of servers available, the capabilities of the available servers are enhanced.

Scaling vertically is rather simple. It typically requires minimal changes to the existing application. Up to a point, it is also often more cost-effective to upgrade a single server than to manage and maintain multiple servers, although any one machine can only be upgraded so far.

Horizontal Scaling

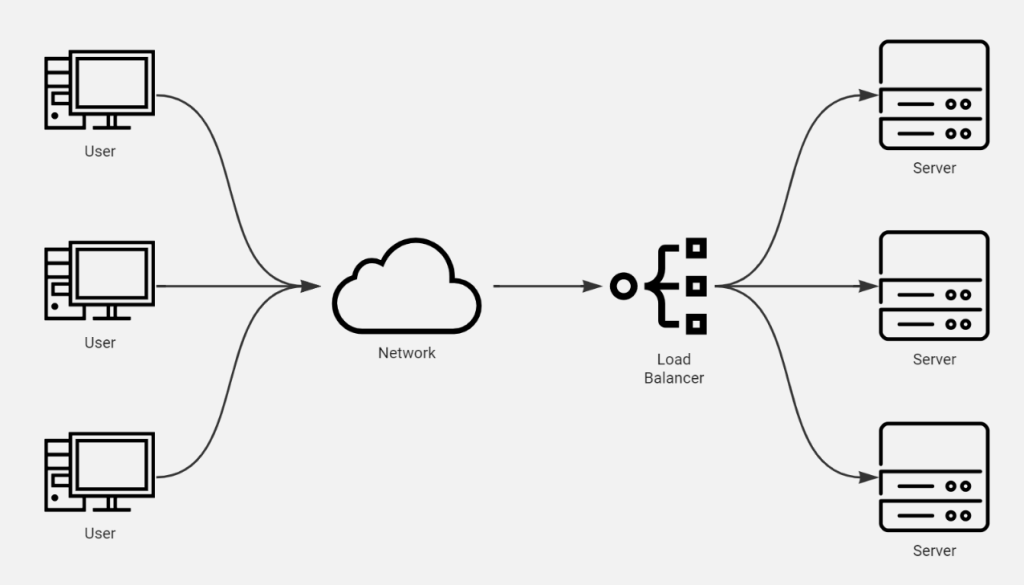

Horizontal scaling involves adding multiple instances of an application to handle increased workload or traffic. In the case of monolithic applications, horizontal scaling requires deploying multiple copies of the monolith and distributing the requests across these instances using a load balancer.

A load balancer acts as a traffic cop. It receives incoming requests and forwards them to different instances of the monolithic application. The balancer also makes sure that the workload is as evenly distributed as possible across the instances.

When deploying these instances, it’s important to consider their geographical dispersion according to your specific requirements. By distributing the instances across different servers or locations, you can enhance the resilience of your system. In the event of a server or regional failure, the load balancer can detect it through health checks. It will then redirect incoming requests to the remaining healthy instances, ensuring uninterrupted service.
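The behaviour described above, spreading requests evenly and skipping instances that fail a health check, can be sketched in a few lines of Python. This is a toy round-robin balancer, not how any specific load balancer product is implemented. The instance names and the `is_healthy` callback are assumptions made for the example.

```python
import itertools


class RoundRobinBalancer:
    """Toy load balancer: rotates through instances, skipping unhealthy ones."""

    def __init__(self, instances, is_healthy):
        self._instances = list(instances)
        self._is_healthy = is_healthy            # callback standing in for a health check
        self._rotation = itertools.cycle(self._instances)

    def pick(self):
        """Return the next healthy instance in rotation."""
        # Try each instance at most once per call so we never loop forever.
        for _ in range(len(self._instances)):
            instance = next(self._rotation)
            if self._is_healthy(instance):
                return instance
        raise RuntimeError("no healthy instances available")


# Usage: three copies of the same monolith; "app-2" goes down midway.
down = set()
balancer = RoundRobinBalancer(["app-1", "app-2", "app-3"], lambda name: name not in down)
first_round = [balancer.pick() for _ in range(4)]
down.add("app-2")
after_failure = [balancer.pick() for _ in range(3)]
```

Once `app-2` is marked as down, requests keep flowing to the remaining instances without the caller noticing. Real load balancers add more strategies (least connections, weighting, sticky sessions), which are left out here.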

Containerization can be used to scale your application if it is compatible. You may automate the deployment, management, and scaling of your application instances by containerizing your application and employing DevOps principles and orchestration technologies such as Kubernetes (K8s). Kubernetes includes capabilities like auto-scaling, load balancing, and efficient resource allocation, which enable your application to dynamically adapt to changing workload demands.
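To give a feel for how auto-scaling decides on the number of instances, the Kubernetes documentation describes the Horizontal Pod Autoscaler's core rule as `desiredReplicas = ceil(currentReplicas * currentMetricValue / desiredMetricValue)`. The Python sketch below reimplements only that arithmetic for illustration. The function name and the default replica bounds are assumptions, and the real autoscaler also applies a tolerance and stabilization behaviour that are omitted here.

```python
import math


def desired_replicas(current_replicas, current_metric, target_metric,
                     min_replicas=1, max_replicas=10):
    """Replica count suggested by the HPA-style ratio, clamped to the bounds."""
    raw = math.ceil(current_replicas * current_metric / target_metric)
    return max(min_replicas, min(max_replicas, raw))
```

For example, three replicas of a monolith averaging 90% CPU against a 60% target give `ceil(3 * 90 / 60)`, so the sketch suggests five replicas. At 30% against the same target, four replicas would shrink to two.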

Caching

Caching can significantly improve the performance and scalability of a monolithic application. When the application serves repeated requests for the same data from the cache instead of going to the backend services, response times drop considerably. By reducing the amount of back-end processing and I/O that needs to be done, caching makes it easier for an application to handle more traffic and grow. Also, by caching results that require a lot of processing power or complicated data transformations, the application can avoid doing the same work twice. This optimization helps improve the application’s general performance and lets it handle more requests, making it easier to scale.

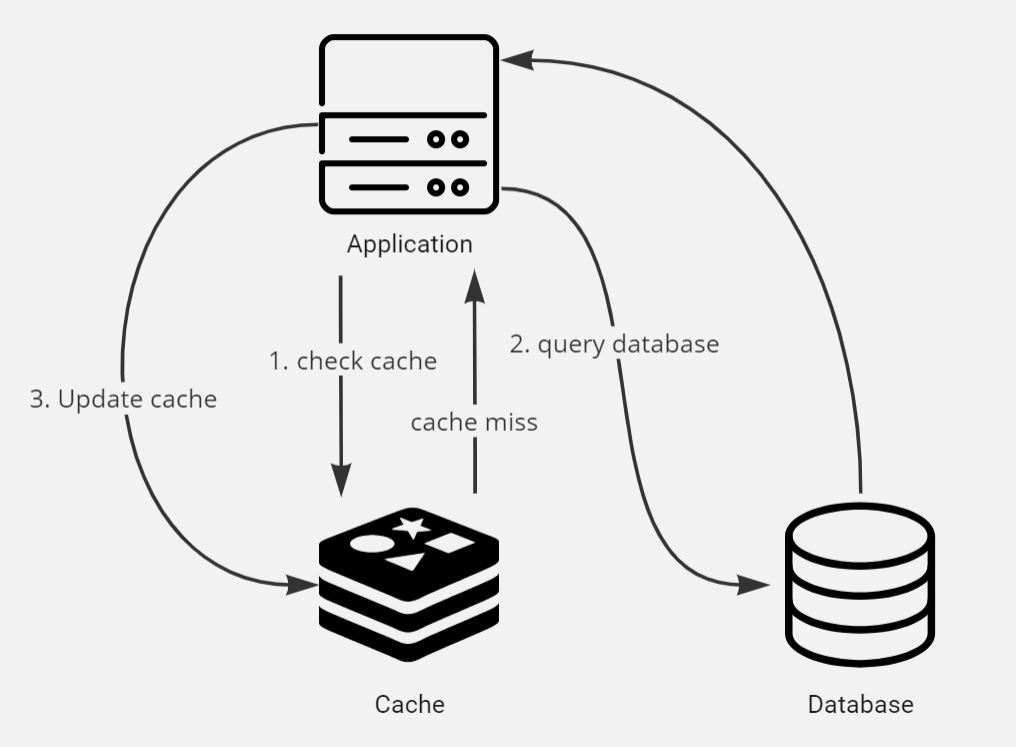

There are many different caching strategies to use, but let’s use the Cache Aside strategy as an example since it is the most commonly used one.

First, the application should look in the cache to see if the needed information is there. If the application can’t find the data in the cache, it should get it from the “slower” storage backend and add it to the cache. This method helps improve speed by reducing the number of times that data needs to be pulled from the slower backend.
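The read path just described can be sketched as follows. This is a minimal in-memory illustration of Cache Aside, assuming a plain dictionary as the cache. The `CacheAsideReader` class and the `load_from_backend` callback are names invented for the example, and a real deployment would usually use a dedicated cache server instead.

```python
class CacheAsideReader:
    """Look in the cache first; on a miss, load from the backend and remember it."""

    def __init__(self, load_from_backend):
        self._load = load_from_backend   # the "slower" storage backend
        self._cache = {}

    def get(self, key):
        if key in self._cache:           # cache hit: the backend is not touched
            return self._cache[key]
        value = self._load(key)          # cache miss: go to the backend
        self._cache[key] = value         # populate the cache for next time
        return value


# Usage: record how often the backend is actually called.
backend_calls = []


def slow_lookup(key):
    backend_calls.append(key)
    return key.upper()


reader = CacheAsideReader(slow_lookup)
first = reader.get("a")
second = reader.get("a")
```

The second `get("a")` is answered from the cache, so `slow_lookup` only runs once.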

Second, it’s very important to set up the cache with the right time-to-live (TTL) value for the stored data. The TTL tells the cache how long it can keep an entry before it is considered stale and needs to be refreshed. With a reasonable TTL, the cache can also keep its memory overhead in check by automatically releasing cached values that are no longer needed.
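Here is a small Python sketch of how a TTL can work. It is an assumption-laden toy: the `TTLCache` class is made up for this article, expired entries are only dropped lazily when they are read, and the clock is injectable purely so the behaviour is easy to demonstrate.

```python
import time


class TTLCache:
    """In-memory cache whose entries expire ttl_seconds after being stored."""

    def __init__(self, ttl_seconds, clock=time.monotonic):
        self._ttl = ttl_seconds
        self._clock = clock
        self._entries = {}               # key -> (value, expires_at)

    def set(self, key, value):
        self._entries[key] = (value, self._clock() + self._ttl)

    def get(self, key, default=None):
        entry = self._entries.get(key)
        if entry is None:
            return default
        value, expires_at = entry
        if self._clock() >= expires_at:  # stale: release it and report a miss
            del self._entries[key]
            return default
        return value


# Usage with a fake clock so time can be advanced by hand.
now = [0.0]
cache = TTLCache(ttl_seconds=30, clock=lambda: now[0])
cache.set("user:1", "Ada")
now[0] = 29.0
still_fresh = cache.get("user:1")
now[0] = 30.0
expired = cache.get("user:1")
```

Choosing the TTL is a trade-off: a short TTL keeps data fresher but sends more reads to the backend, while a long TTL does the opposite.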

Caches come in different forms and can improve speed by a lot, especially when the same values are read over and over again. By caching data that is often read, the data can be served straight from the cache instead of having to be retrieved from the slower backend. This cuts response times by a lot and makes the service work better overall.

It’s important to note that replying to the request and adding data to the cache (on a cache miss) can happen at the same time. This means that once the program has fetched the data from the slower backend, it can store the data in the cache while it continues processing the request. Doing the two in parallel helps improve speed and reduces the chance that a request will be served late.
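One possible way to sketch this in Python is to hand the cache write to a worker thread so the caller gets its value back immediately. The `BackgroundFillCache` class below is hypothetical. With a local dictionary the write is trivially fast, so treat the dictionary as a stand-in for a remote cache where the write would involve a network round trip.

```python
from concurrent.futures import ThreadPoolExecutor


class BackgroundFillCache:
    """Cache-aside reader that writes misses to the cache on a worker thread."""

    def __init__(self, load_from_backend, max_workers=2):
        self._load = load_from_backend
        self._cache = {}                 # stand-in for a remote cache
        self._executor = ThreadPoolExecutor(max_workers=max_workers)

    def _store(self, key, value):
        self._cache[key] = value

    def get(self, key):
        """Return (value, pending_write); pending_write is None on a cache hit."""
        if key in self._cache:
            return self._cache[key], None
        value = self._load(key)
        # Schedule the cache write and return without waiting for it.
        pending_write = self._executor.submit(self._store, key, value)
        return value, pending_write

    def close(self):
        self._executor.shutdown(wait=True)
```

The caller can ignore the returned future in normal operation. It is exposed here only so that a test, or a graceful shutdown, can wait for the write to finish.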

Pain Points of Scaling a Monolith

When you look at the problems and pain points of monolithic apps, microservices start to look like a good option. There is a limit to how far a monolith can be scaled before it starts to cause problems. Not all of these problems are unsolvable, though, and microservices are only one solution that may not work for every application.

For example, if you’re using a monolithic program, you’re probably stuck in a cycle of “deploy-all-the-things” whenever a new release is ready. Full program deploys are complicated, and if there are bugs in a release, they can cause a lot of trouble.

Planning for large rollbacks in case of failure can take almost as much time as making the changes for a release, and rollback plans are often incomplete and haven’t been tried. To prepare for rolling back monolithic applications, you need to make rollback scripts for the database, take snapshots of the application servers (if they’re running on a virtual machine), make copies of the previous application release, and make sure all the relevant teams are working together.

A fully-coupled application design makes it hard to roll back deployments. No single piece of utility, no matter how small, can be put into place without the rest.

Heavily Interdependent Structure

Since a monolith is a single, self-contained application, it usually depends on a lot of third-party libraries. As the number of dependencies in an app goes up, so does the chance that it will have bugs and security holes. Most of the source code in your app probably comes from these third-party dependencies. Because it’s a single-piece program, any third-party library that is included is available to the whole application. It’s important to understand how widespread this dependency problem is because it means that you can’t separate that third-party library from the rest of the program, even if it’s only used by one piece of code.

Because monoliths have such a high dependency overhead, even libraries that are only used in the smallest ways can cause problems that require redeploying the whole service when patches are made. Because dependencies cause a lot of coupling, teams will try to update all dependencies at the same time. But if the program doesn’t have the right unit, integration, and regression tests, this will cause more bugs and security holes.

It’s not ideal to have a heavy dependency structure that requires re-deploying the whole application. This is probably one of the biggest security risks for a monolithic application, because teams tend to put off updating or patching dependencies, even when they have known security holes.

Ripple Effect

With every change to the code, there is a high chance that a bug or several bugs will be added to an application. This is related to managing dependencies. This is not a new way to introduce bugs, but it’s important to know that the more tightly coupled and unified an application is, the more likely it is that a newly introduced bug will cause problems throughout the application. This means that as changes are made to a monolithic application, the amount of technical debt grows dramatically, and it is unlikely that even the best test suites will find all of the problems before a new release.

Downtime During Deployment

Because monolithic apps are usually released all at once as a single unit, they often need to be taken offline for a release. Along with the need for downtime, only one version of the application runs at a time. Yes, different libraries can have different versions, but to upgrade a version of a library, the whole program must be redeployed. Rolling updates can avoid the downtime, but they aren’t always feasible.
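For readers who have not seen one, a rolling update can be sketched as a simple loop: take one instance out of rotation, redeploy it, put it back, and move on to the next. The Python below is a simulation only. The `rolling_update` function and the instance names are made up, and it assumes at least two instances sit behind a load balancer.

```python
def rolling_update(versions, new_version):
    """Upgrade instances one at a time; return who was serving during each step.

    `versions` maps instance name -> deployed version and is updated in place.
    """
    if len(versions) < 2:
        raise ValueError("a rolling update needs at least two instances")
    in_rotation = set(versions)
    serving_during_update = []
    for name in sorted(versions):
        in_rotation.remove(name)                       # stop routing traffic to it
        serving_during_update.append(sorted(in_rotation))
        versions[name] = new_version                   # redeploy the whole monolith here
        in_rotation.add(name)                          # put it back behind the balancer
    return serving_during_update


fleet = {"app-1": "v1", "app-2": "v1", "app-3": "v1"}
steps = rolling_update(fleet, "v2")
```

At every step at least two instances keep serving traffic, so users see no downtime. The catch is that old and new versions run side by side for a while, so anything they share, such as a database schema, has to work with both. That is one common reason a rolling update may not be feasible.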

Conclusion

In this article, we discussed what a monolithic architecture is, its advantages and disadvantages, its scaling possibilities, and its pain points.

Monolithic architecture is a traditional way of building software in which an application is made as a single, connected unit. Its benefits include simplicity, cost-effectiveness, consistency, and robustness. But scalability, maintainability, and deployability can be hard with monolithic systems.

You can scale a monolithic design vertically, or horizontally by running multiple instances behind a load balancer, and you can use caching to take load off the backend. Even though these methods can help handle more work, they may run into scaling bottlenecks, and they don’t let you isolate and scale each component separately.

The article also talked about the problems with scaling a monolithic architecture, like how hard it is to deal with rising complexity, how little flexibility there is, and how deployments could cause downtime. When scalability and agility are important, these pain points show how important it is to carefully consider other architectural methods like microservices.

Should we abandon the monolith?

If your application works well with the number of users you have now and you still have room to grow vertically, it probably doesn’t make sense to re-architect and rebuild it right now. In other words, you are probably doing fine if your application deployments are easy and not very thrilling and you have a good handle on technical debt and bug overhead.

If you think that your application will grow faster than you can scale it in the next 12 to 18 months, then the answer is yes. Still, you should take things slowly. Find separate pieces of features in your app and separate them.

The main difference between Service Oriented Architecture and Microservice Architecture is how many responsibilities each service has. So, if you have a point-of-sale program, make the part that manages inventory into a separate service. Then go to the next responsibility. Bigger parts can always be broken up into smaller ones later.
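One hedged way to prepare for that kind of extraction is to put the inventory logic behind a small interface first, so the rest of the point-of-sale code does not care whether inventory runs in-process or as a separate service. Every name in this Python sketch (`InventoryPort`, `InProcessInventory`, `RemoteInventoryClient`, `PointOfSale`) is invented for illustration, and the "remote" client takes a plain callable instead of making a real network call.

```python
from typing import Callable, Protocol


class InventoryPort(Protocol):
    """The only thing the point-of-sale code is allowed to know about inventory."""

    def reserve(self, sku: str, quantity: int) -> bool: ...


class InProcessInventory:
    """Today: inventory lives inside the monolith."""

    def __init__(self, stock: dict):
        self._stock = dict(stock)

    def reserve(self, sku: str, quantity: int) -> bool:
        if self._stock.get(sku, 0) < quantity:
            return False
        self._stock[sku] -= quantity
        return True


class RemoteInventoryClient:
    """Later: same interface, but the work happens in an extracted service.

    `send_request` is a hypothetical transport (HTTP, RPC, queue...) supplied by the caller.
    """

    def __init__(self, send_request: Callable[[dict], dict]):
        self._send = send_request

    def reserve(self, sku: str, quantity: int) -> bool:
        response = self._send({"action": "reserve", "sku": sku, "quantity": quantity})
        return bool(response.get("ok"))


class PointOfSale:
    def __init__(self, inventory: InventoryPort):
        self._inventory = inventory

    def sell(self, sku: str, quantity: int) -> str:
        return "sold" if self._inventory.reserve(sku, quantity) else "out of stock"
```

`PointOfSale` works unchanged with either implementation, so the inventory responsibility can be moved out when, and only when, it is worth it.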

When switching from a monolithic architecture to a microservice or service-oriented design, it’s easy to go too far. Both have their good points, and it’s just as important to know where to stop as to know where to start. If there are too many services to manage, you should think about reducing the number of microservices in your app. If there are too many dependencies on one application and you can’t scale it or it’s hard to launch it, you might want to split it up.

At the end of the day, a monolithic architecture, a service-oriented architecture and a microservice architecture are just tools in your toolbox. Select the tools based on the problem, and not on the latest trend.

I hope that you found value in this article, and I hope to see you again.